- The Hemingway Report

#81: Using GenAI to scale personalised exposure therapy

Adam Hutchinson shares learnings from two years of using GenAI video in mental healthcare

Hi friend,

Adam Hutchinson was on holiday in Okiwi Bay, New Zealand, when he first got the idea.

It was January 2023 and GenAI models were exploding into public consciousness. While most of us were using them to make funny videos (well, at least that’s what I was doing), Adam was staring at a different problem.

His company, oVRcome, had already transformed exposure therapy by using VR on smartphones. But Adam was now trying to figure out how to make the treatment more effective whilst also making it even more accessible.

Two years later, Adam and oVRcome are successfully using GenAI to create personalised VR experiences that are both safe and effective. They’ve been able to move past hype to create something that is actually working.

In this report, we go deep into how Adam has implemented GenAI in his product, how he designs for safety, how he thinks about commercialising this innovation and the lessons he’s learned along the way.

If you like this kind of story (ones where I share real learnings from innovators), I’ll write more of them. So, let me know.

Alright, let’s get into it!

Join The Hemingway Community

Adam is a member of The Hemingway Community. If you’d like to join him and over 300 founders, researchers, clinicians and operators, you should check it out. Membership gets you access to exclusive content, private IRL events, online expert sessions and our vetted Slack group.

Filming 1,000 exposure scenes

Adam started oVRcome because of his own experience with severe social anxiety. When he was 16, his anxiety was so bad that he had to drop out of school and go to work on a farm. Years later, after building two other companies, Adam returned to solve his own problem, founding oVRcome as a way to scale access to exposure therapy using VR.

“We started by taking a proven treatment, exposure therapy, and removing the four barriers that kept most people from accessing it.

“These were the cost of treatment, location of the user, long waiting lists for therapists, and stigma associated with seeking treatment for mental health,” says Adam.

The three traditional forms of exposure therapy all had limitations. In-vivo (live) exposure is time-intensive, expensive and requires a lot of logistical effort. Assigning in-vivo exposure therapy as homework typically has low adherence. Another option is to guide a patient through their imagination to create a simulated exposure. But that depends on the client’s ability to remember specific details and generate them in their mind.

Adam saw VR as a way to create a more accessible, lower-cost treatment. He started by using a special camera to film scenes in VR and making those available to clinicians and clients on smartphones and cardboard headsets. It worked well. oVRcome was adopted by over 1,400 clinicians, and the company published two peer-reviewed trials showing that its filmed VR exposure therapy could match traditional in-person treatment.

The oVRcome team filming a scene with an Insta360 Pro at Cashmere High School

“By delivering filmed VR through a smartphone and a simple cardboard headset, we were able to match the outcomes of traditional exposure therapy at a fraction of the cost and finally make it scalable,” says Adam.

Over the following years, they physically filmed over 1,000 exposure scenes and made them available to clinicians and clients.

But at the start of 2023, there were still two questions nagging Adam. First, how could they continue creating more exposure scenes without their costs ballooning? And second, knowing that outcomes improve when exposures are personalised, how could they make more personalised content?

That's when the idea landed: What if they used these new GenAI models to generate their own VR experiences? What would that do for access, scalability and outcomes?

While the idea was fascinating, it came with a lot of unknowns. Could synthetic video trigger a genuine biological response? Could AI make a scene real enough that it could induce the right levels of anxiety in a user? Could this be done in a safe and ethical way? And even if they could do all this, how would clinicians and clients react?

I’m interested in details. So I wanted to know exactly what Adam did after he had his epiphany in Okiwi Bay. The first thing he did was to get his team on board.

Your first job as a founder is to align your team behind a shared vision

“I wanted the team to be as energised as I was, and that meant bringing them into the problem. I wanted them to see the vision and the potential," says Adam. "Luckily, I work with people who are just as serious about fixing mental health as I am, and once they saw it, we were all in."

Many great businesses are built on the back of a technological inflection, but recognising that point in time is a skill. The only way to do it is to be close to the technology and continue playing with it. This is what Adam and his team were doing: they’d regularly run little experiments with the AI to see if it could create realistic and suitable VR experiences.

But it took a few false starts before Adam and the team saw progress. The early experiments simply didn't work. The models weren't ready yet. The quality wasn't there. The team would generate scenes that looked passable in screenshots but fell apart in motion or lacked the sensory precision needed to maintain immersion.

Then came the breakthrough moment.

The indistinguishability surprise

One day, Adam and his team recognised that the models had become capable of generating convincing scenes. So they ran a pilot with the University of Canterbury, generating exposures for clinicians and clients. When the data came back, it showed that their AI-generated content had produced significantly higher scores on the Subjective Units of Distress Scale (SUDS), a self-reported measure, than filmed content. Synthetic content was not only able to trigger a distress response similar to filmed content, it was capable of surpassing it.

Screenshots from some of oVRcome’s exposure scenes. The one on the left is filmed, the one on the right is AI.

“To be honest, we expected users to notice the difference between [AI-generated] and filmed scenes immediately,” says Adam. “Instead, we learned that in many cases, they couldn't. Qualitative feedback showed that nearly half of the AI videos were not identified as AI at all.”

While more evidence is still needed, early signs are promising that this could be an effective way to deliver more personalised exposure therapy at scale.

Adam also gained one of his first non-intuitive insights in this whole process: perfect graphics were actually much less important than relevant triggers. Once a user is emotionally engaged in their fear hierarchy, their brain fills in the gaps.

Building a GenAI video generation system

Again, I wanted details. So I asked Adam lots of questions about how his product actually works. Here’s the system oVRcome built.

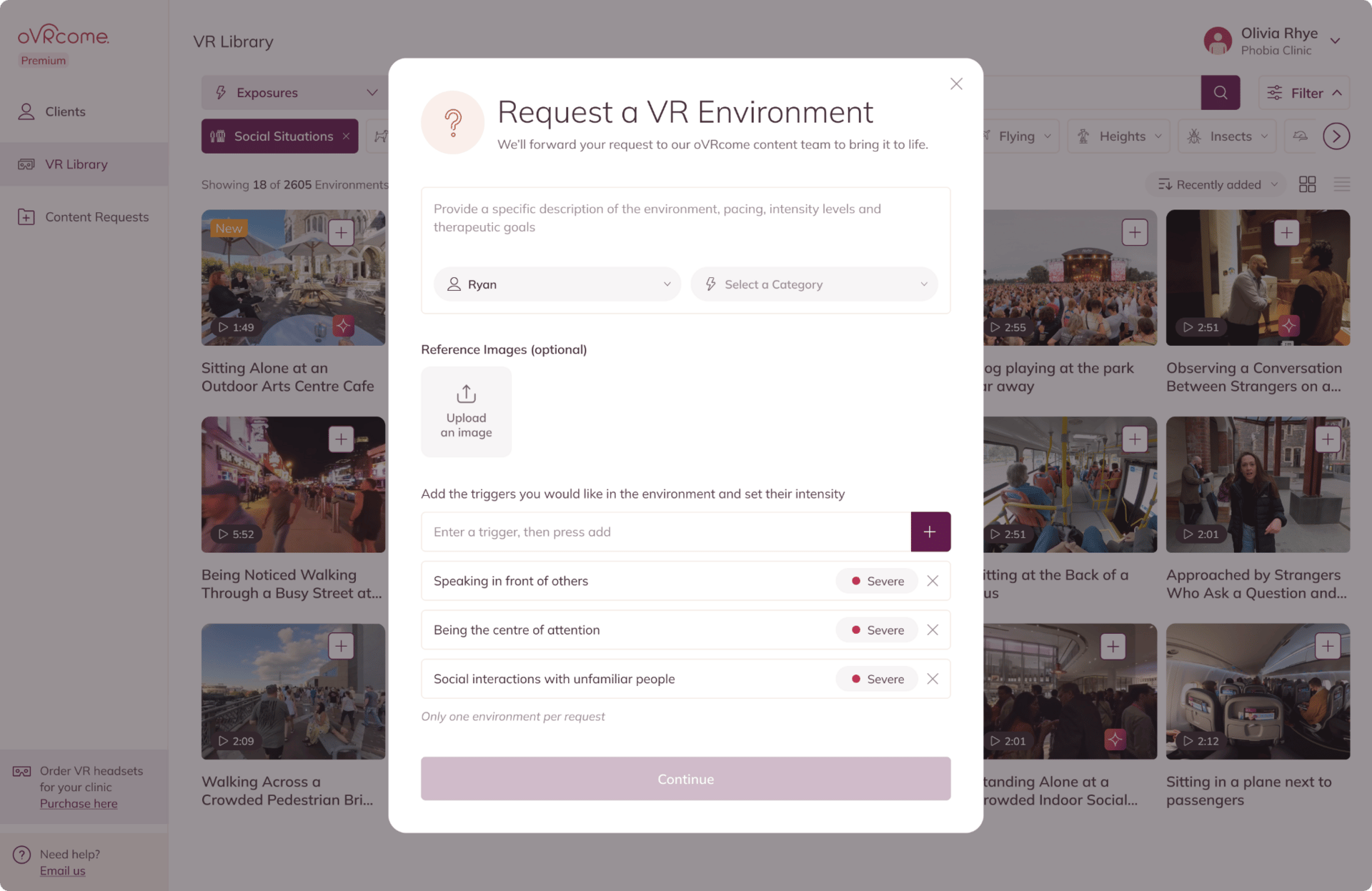

First, a clinician opens their oVRcome portal and browses the existing library of filmed VR scenarios. If they can't find one that matches their patient's specific trigger, they can request custom content directly from the oVRcome interface.

The portal asks structured questions: What's the trigger? What intensity level? Does the scene need to match a specific location? The clinician can also upload reference photos if needed.

How a clinician requests a VR exposure scene from oVRcome

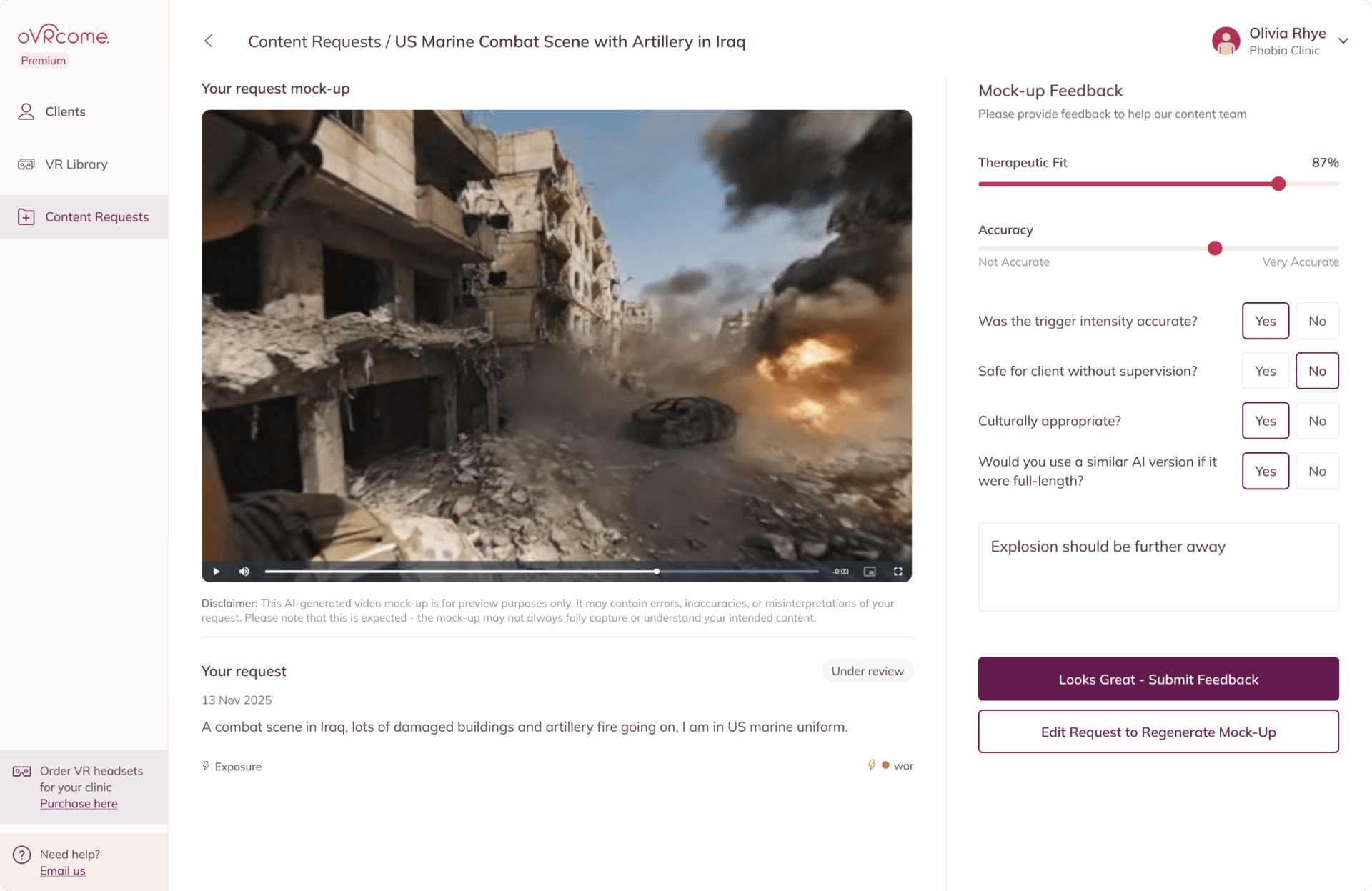

After a clinician requests a scene, a preview is generated immediately. The clinician gives feedback on whether it meets the therapeutic goal for their client and if they would use it in practice.

The oVRcome AI engine then takes three inputs: what the clinician asked for, any reference material the clinician provides and oVRcome’s structured clinical rules.

This generates a very specific prompt, which is then fed into a generative video model, currently Sora or Veo 3, which creates the base scene for the exposure. The oVRcome team then run a quality and safety check and either iterate on the scene or, if they’re satisfied, move it into the clinician’s portal for them to use.

The AI generates the visuals, the clinician sets the boundaries, and oVRcome ensures it stays clinically appropriate and safe. The clinician then controls how it's used with clients, delivering the treatment either in-clinic or remotely via smartphone with a simple cardboard viewer. The entire process takes 3-5 days.
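To make the three-input step more concrete, here is a minimal sketch of how a structured request and a set of clinical rules could be combined into one prompt. This is an illustration under stated assumptions, not oVRcome's implementation: the `SceneRequest` fields, the 1 to 10 intensity scale, the two example rules and the `build_prompt` function are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SceneRequest:
    """Hypothetical shape of a clinician's request (field names are assumptions)."""
    trigger: str                 # e.g. "spider"
    intensity: int               # 1 (mild) to 10 (severe); the scale is an assumption
    location: str = ""           # optional specific location to match
    reference_photos: list[str] = field(default_factory=list)


# Illustrative stand-ins for structured clinical rules; not oVRcome's real rules.
CLINICAL_RULES = [
    "Keep physics and object shapes stable for the whole clip.",
    "Do not introduce triggers that the clinician did not request.",
]


def build_prompt(request: SceneRequest, rules: list[str] = CLINICAL_RULES) -> str:
    """Combine the request, reference material and rules into one text prompt."""
    if not request.trigger.strip():
        raise ValueError("a trigger is required")
    if not 1 <= request.intensity <= 10:
        raise ValueError("intensity must be between 1 and 10")

    lines = [
        f"Scene trigger: {request.trigger.strip()}",
        f"Intensity level: {request.intensity}/10",
    ]
    if request.location:
        lines.append(f"Location to match: {request.location}")
    if request.reference_photos:
        lines.append(f"Reference photos supplied: {len(request.reference_photos)}")
    lines.append("Constraints:")
    lines.extend(f"- {rule}" for rule in rules)
    return "\n".join(lines)
```

Calling `build_prompt(SceneRequest(trigger="spider", intensity=3, location="kitchen"))` returns a plain-text prompt that a human reviewer can read before anything is sent to a video model, which fits the review loop described above.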

“The process is already faster and more responsive than filming, and we are streamlining it continuously. The long-term goal is full automation by 2026, so clinicians can request, review and deploy personalised exposures in as close to real time as possible,” says Adam.

The stakes are different in VR (and mental health)

Most applications of AI in mental health are text-based. While these text-based interventions are not without risk, VR is different.

"The risk profile of VR is fundamentally different from a chatbot," explains Adam. "In VR, 'hallucinations' are sensory. If the physics of a room break, or an object warps unnaturally, it triggers a visceral rejection in the brain - often causing nausea or confusion. The bar for safety is higher because we are engaging the user's visual and vestibular systems, not just their linguistic processing," he says.

oVRcome designs for safety by combining each individual clinician’s guardrails with oVRcome’s own structured clinical rules and review loops. As they’ve grown, they’ve used data from clinicians to refine their prompts and create more appropriate scenes.

“If you type a prompt into a standard video generator, the most you can expect is to hope for a therapeutic result. Consistent therapeutic results require a layer of clinical constraints,” explains Adam. “We are able to do this because we utilise data and feedback from over 1,400 clinicians. This dataset allows us to define exactly what constitutes a safe exposure versus a harmful one, and what constitutes an optimal exposure for each individual. We can constrain the AI to stay within those therapeutic boundaries and within the optimisation zone.”
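One simple way to picture a "layer of clinical constraints" is a check that runs before any scene is generated. The sketch below is an assumption-heavy illustration, not a description of oVRcome's system: the `Guardrails` class, its fields and the `apply_guardrails` function are hypothetical names, and real clinical rules would be far richer than an intensity band and an exclusion list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Hypothetical per-client limits set by a clinician."""
    min_intensity: int
    max_intensity: int
    excluded_elements: frozenset[str] = frozenset()


def apply_guardrails(
    intensity: int, elements: list[str], rails: Guardrails
) -> tuple[int, list[str]]:
    """Clamp intensity into the allowed band and drop excluded scene elements."""
    if rails.min_intensity > rails.max_intensity:
        raise ValueError("min_intensity must not exceed max_intensity")
    # Clamp rather than reject, so an out-of-band request still yields a usable scene.
    clamped = max(rails.min_intensity, min(intensity, rails.max_intensity))
    # Exclusions are compared in lowercase, so they should be stored in lowercase.
    allowed = [e for e in elements if e.lower() not in rails.excluded_elements]
    return clamped, allowed
```

The design choice here is to clamp silently. A stricter variant could raise an error instead, forcing a human to look at any request that falls outside the clinician's boundaries.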

In his five years of building oVRcome, Adam learned a few lessons.

Lesson 1: The tortoise beats the hare

Founders want to move fast. It can be easy to get frustrated when you see other businesses raising money or landing new customers. But Adam firmly believes that good founders must resist that frustration and ensure they respect the guardrails and demands of the system.

Building in mental health is not like building in other sectors. The guardrails are there for a reason and the sooner you respect them, the faster you actually move.

“HIPAA, clinical rigor, regulators, ethics committees, all of it can feel slow compared to consumer tech sprints, but rushing past them usually means you end up rebuilding later or harming trust with clinicians and patients. The only way to create something durable and genuinely helpful is to embrace the constraints, learn the system, and solve the slow problems properly.”

It is not the fastest path, but it is the only path that compounds over time

Lesson 2: In VR, deliver precision to ensure immersion

Adam learned that one of the most important things in VR exposure therapy is to deliver relevant triggers to the user. But he also learned that for exposure therapy to work, the user must feel present in the environment. This doesn’t mean delivering 4K-quality scenes, but it does mean generating scenes precisely enough that the details support immersion rather than break it.

It’s like reading a book. If the details are rich and accurate, you stay lost in the story.

“If the author suddenly uses a word that doesn't fit the era, or a character acts illogically, the reader is pulled out of the narrative. In VR, this effect is immediate. If a spider moves like a cat, or a shadow falls in the wrong direction, the illusion breaks, and the therapeutic anxiety (SUDS) evaporates,” explains Adam.

Adam also learned that AI models naturally drift toward generic perfection - they make cobblestone roads look too even, or boarded-up windows and open-air market goods look like a repetitive, identical pattern. You’ve probably seen some AI slop videos like this. Adam’s team learned to focus on catching these "cleanliness" errors and adjusting their prompts over time to avoid them.

Lesson 3: Use user feedback to deliver contextual accuracy

A challenge more difficult than ensuring visual fidelity is replicating lived experience details that only the patient knows.

“We had this veteran participant, and for him, it wasn't enough to simply show a generic nighttime convoy. He pointed out details the AI (and even our clinicians) initially missed: the vehicles had to be in single file; the flares had to be specifically handheld and shot out the back; the weapon had to be an M4 [carbine], not any other kind of rifle. If the AI renders a generic gun or a disorganised convoy, it is no different from a video game. When it renders the specific M4 and the correct tactical formation, it becomes a memory trigger.”

Generating any kind of battlefield or combat exposure using filmed content is incredibly difficult. But with GenAI, oVRcome can create these scenes and have them tailored to the specific context of the client. This can be super helpful in the treatment of many disorders, including PTSD.

Lesson 4: Know your limits

It’s critical to recognise the limits of your innovation.

Adam noted a few limits in using GenAI for VR. “Creating experiences for vulnerable populations (e.g., children) is much harder. Also, AI struggles with generating realistic crowd scenes. Creating exposures for social anxiety is also challenging. For example, generating a realistic conversation with a boss where they show subtle disappointment requires a level of micro-expression fidelity that AI video isn't quite ready for yet. The process also takes some time (a matter of days), and most models can only generate short videos (about eight seconds).”

Staying within the limits of your innovation allows you to build more trust with clinicians and buyers. As the technology advances, your innovation can too.

Translating innovation to commercial impact

While Adam’s mission is to make exposure treatment more impactful and more accessible, he knows that to maximise the impact of his innovation, it must sit within a sustainable commercial model.

I’ve been thinking about how this innovation impacts the business of oVRcome. First, it promises a lower-cost method for delivering exposure therapy. This is important. The reality is that if you’re not lowering costs and delivering ROI to payers, it’s very hard to get adopted. But perhaps even more importantly, this innovation has the potential to improve response rates through the use of these highly personalised exposures. One of my major predictions for 2026 is that the mental health system will shift from focusing on access to focusing on quality and the actual outcomes delivered by providers. If you can deliver better outcomes, and prove it, you have a better chance of having a sustainable business model.

Adam is betting hard on value-based deals with payers to get paid for this innovation.

If we succeed as a startup, it's because we will be solving a payer's needs

Defensibility

After talking with Adam, I was wondering if this innovation is defensible. I think it is, for two reasons.

First, it will benefit from scale economies. As oVRcome gets more users, its incremental cost to serve those users should go down. Its filmed content can be amortised across its user base (like Netflix). It also has a network effect - each new AI-generated scene becomes available (with clinician consent) to the rest of the clinicians using the platform. Of course, scale economies like this are the promise of any digital intervention. The harder problem for these businesses tends to be solving for distribution and reimbursement.

Secondly, they have process power. You may recognise this term from Hamilton Helmer’s framework for enterprise value creation (if not, I’d recommend reading his book - it’s the only business book I ever recommend). Replicating oVRcome’s process is difficult and will only get harder. Every time they generate a scene and get clinician feedback, that data refines their logic and prompts. They have a specialised technical team that uses proprietary pre- and post-production methods to extend and lock the consistency, ensuring the world stays stable and seamless for the duration of the exposure. There’s significant value ingrained in that process - if you started today and tried to replicate what oVRcome do, it would be difficult.

Moving towards adaptive generation

Adam has some interesting thoughts on where this innovation could go in the future. He wants to speed up the time it takes to generate an exposure scene. But he also wants to move towards more adaptive generation.

"We are moving from generating videos between sessions to generating them during sessions," he says.

The goal is adaptive generation - where the VR environment changes based on the patient's response

“If a patient is coping well with a spider exposure, the system could automatically increase intensity - making the spider slightly larger or moving it closer. If they're overwhelmed, it dials back. This keeps the patient in the optimal therapeutic zone automatically," Adam explains.
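The feedback loop Adam describes can be sketched as a small step controller. This is only an illustration of the idea: the `next_intensity` function, the 1 to 10 intensity levels and the 30/70 distress band are assumptions chosen for the example, not clinical thresholds and not oVRcome's logic. The distress score is assumed to be on a 0 to 100 scale, like a SUDS rating.

```python
def next_intensity(
    current: int,
    distress: float,
    low: float = 30.0,    # below this, the patient is assumed to be coping well
    high: float = 70.0,   # above this, the patient is assumed to be overwhelmed
    min_level: int = 1,
    max_level: int = 10,
) -> int:
    """Step intensity up when distress is below the band, down when above it."""
    if distress < low:
        step = 1
    elif distress > high:
        step = -1
    else:
        step = 0  # inside the target band: hold the current intensity
    # Never leave the allowed range, whatever the distress reading says.
    return max(min_level, min(max_level, current + step))
```

Moving one level at a time is a deliberately cautious choice for the sketch. A real system would presumably also need clinician-set ceilings and a way for the clinician or client to stop the session at any point.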

To make this work, the team is integrating real-time physiological data directly into the experience: gaze tracking, pupil dilation, and heart rate variability. "We can detect 'safety behaviours' that a clinician might miss," says Adam. "For example, if the headset detects that the patient is closing their eyes or avoiding looking at the spider, the system knows the exposure isn't working effectively. We are building the capability for the AI to respond to these biological signals."
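As a rough illustration of how avoidance might be flagged from headset data, the sketch below counts how often the eyes were closed or the gaze was off the feared object within a window of readings. Everything here is hypothetical: the `GazeSample` fields, the function names and the 0.5 threshold are assumptions for the example, and real headsets expose gaze data in their own formats.

```python
from dataclasses import dataclass


@dataclass
class GazeSample:
    """One hypothetical headset reading."""
    eyes_open: bool
    on_target: bool  # True when the gaze falls on the feared object


def avoidance_ratio(samples: list[GazeSample]) -> float:
    """Fraction of samples where the eyes were closed or looking away."""
    if not samples:
        raise ValueError("need at least one sample")
    avoided = sum(1 for s in samples if not (s.eyes_open and s.on_target))
    return avoided / len(samples)


def shows_avoidance(samples: list[GazeSample], threshold: float = 0.5) -> bool:
    """Flag a window as likely avoidance when the ratio reaches the threshold."""
    return avoidance_ratio(samples) >= threshold
```

A flag like this would be a prompt for the clinician to check in, not a diagnosis. Heart rate variability and pupil dilation would need their own signal processing and are left out of the sketch.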

In 2025, oVRcome was selected as part of the Wellcome Trust accelerator for GenAI in mental health and collaborated with the Google DeepMind team.

Adam’s longer-term plan is to get even more validation for oVRcome’s solution and scale it around the world. "We are moving beyond pilots to large-scale validation," Adam says.

"The ultimate goal is to move this technology from an experimental tool to a reimbursable medical device that can be prescribed as standard of care."

That’s all for this week. Many thanks to Adam for all the time he spent with me over the last few weeks. If you liked this report, please let me know.

Until next time…

Keep fighting the good fight!

Steve

Founder of Hemingway

